데이터 엔지니어(두번째 이야기)

1. Importing Data from Flat Files

Python 라이브러리 판다스는 재무 정량 분석을 위해 2008년 Wes McKinney(웨스 맥키니)에 의해 개발되었지만 현재는 많은 분야에 사용되고 있다. 데이터를 쉽게 로드하고 조작하고 많은 분석 및 시각화 라이브러리와 통합된다. 판다스의 데이터프레임은 2차원 데이터 구조이다.

플랫 파일 특징

- Simple, easy to produce format

- data stored as plain text(no formating)

- one row per line

- values for different fields are separated by a delimiter

- most common flat file type : comma-separated values

- one pandas function to load them all : read_csv()

기초적인 pandas read로 데이터 읽기

# Import pandas as pd

import pandas as pd

# Read the CSV and assign it to the variable data

data = pd.read_csv('vt_tax_data_2016.csv')

# View the first few lines of data

print(data.head())sep 구분자로 read하고 groupby로 묶고 N1 칼럼 합 구해서 plot하기

# Import pandas with the alias pd

import pandas as pd

# Load TSV using the sep keyword argument to set delimiter

data = pd.read_csv('vt_tax_data_2016.tsv', sep = "\t")

print(data)

# Plot the total number of tax returns by income group

counts = data.groupby("agi_stub").N1.sum()

counts.plot.bar()

plt.show()

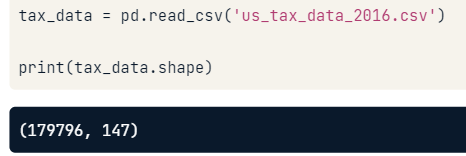

shape keyword (행, 열)

usecols keyword (칼럼명 또는 칼럼번호로 지정열만 로드)

nrows keyword (출력 행 수 지정)

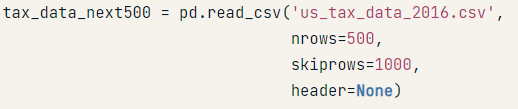

skiprows로 건너뛸 행 수를 적을 수 있다. header에 None 값이면 헤더 행이 없음을 의미한다. names 키워드로 칼럼명을 지정해줄 수도 있다.

# Create list of columns to use

cols = ['zipcode','agi_stub','mars1','MARS2','NUMDEP']

# Create data frame from csv using only selected columns

data = pd.read_csv("vt_tax_data_2016.csv", usecols=cols)

# View counts of dependents and tax returns by income level

print(data.groupby("agi_stub").sum())# Create data frame of next 500 rows with labeled columns

vt_data_next500 = pd.read_csv("vt_tax_data_2016.csv",

nrows = 500,

skiprows = 500,

header = None,

names = vt_data_first500)

# View the Vermont data frames to confirm they're different

print(vt_data_first500.head())

print(vt_data_next500.head())

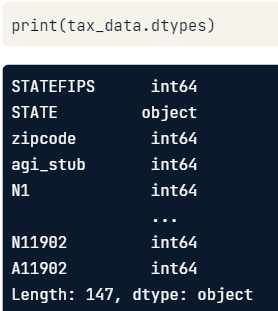

dtypes

비표준 데이터 유형은 인용문으로 전달되어야 한다.

결측값이 포함된 행

에러가 있는 라인

error_bad_lines = False : 오류가 있는 라인을 건너 뜀

warn_bad_lines = True : 구분할 수 없는 행을 건너뛰었을 경우 알림 표시

date type 읽기 실습

# Create dict specifying data types for agi_stub and zipcode

data_types = {'agi_stub':'category',

'zipcode':str}

# Load csv using dtype to set correct data types

data = pd.read_csv("vt_tax_data_2016.csv", dtype=data_types)

# Print data types of resulting frame

print(data.dtypes.head())

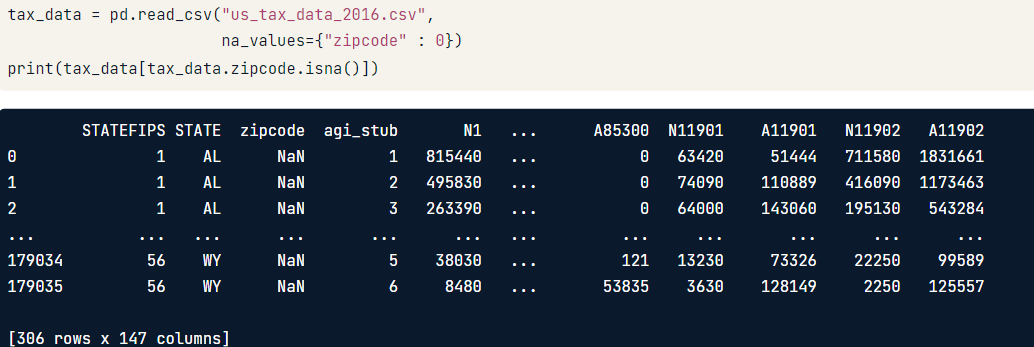

결측치 실습

# Create dict specifying that 0s in zipcode are NA values

null_values = {'zipcode':0}

# Load csv using na_values keyword argument

data = pd.read_csv("vt_tax_data_2016.csv",

na_values=null_values)

# View rows with NA ZIP codes

print(data[data.zipcode.isna()])

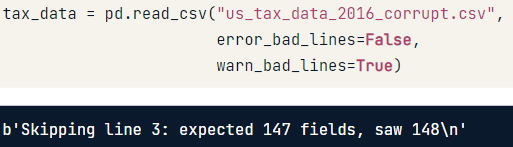

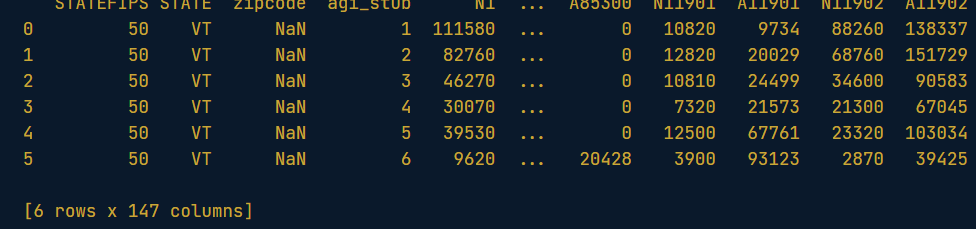

에러 행 실습

try:

# Set warn_bad_lines to issue warnings about bad records

data = pd.read_csv("vt_tax_data_2016_corrupt.csv",

error_bad_lines=False,

warn_bad_lines=True)

# View first 5 records

print(data.head())

except pd.io.common.CParserError:

print("Your data contained rows that could not be parsed.")

2. Importing Data From Excel Files

# Load pandas as pd

import pandas as pd

# Read spreadsheet and assign it to survey_responses

survey_responses = pd.read_excel('fcc_survey.xlsx')

# View the head of the data frame

print(survey_responses.head())문자열을 만들 때 :로 열 범위를 지정하고 쉼표로 구분된 열/범위 목록을 지정할 수 있다.

ex) "A:D, H"는 열 A에서 D까지, 더하기 열 H를 가져옴.

열은 문자열에서 임의의 순서로 지정할 수 있다.

건너뛰기는 스프레드시트의 시작 부분에서 건너뛸 행 번호 목록 또는 건너뛸 행 수를 선택할 수 있다.

# Create string of lettered columns to load

col_string = "AD, AW:BA"

# Load data with skiprows and usecols set

survey_responses = pd.read_excel("fcc_survey_headers.xlsx",

skiprows=2,

usecols=col_string)

# View the names of the columns selected

print(survey_responses.columns)sheet_name으로 읽기

# Create df from second worksheet by referencing its name

responses_2017 = pd.read_excel("fcc_survey.xlsx",

sheet_name='2017')

# Graph where people would like to get a developer job

job_prefs = responses_2017.groupby("JobPref").JobPref.count()

job_prefs.plot.barh()

plt.show()sheet_name=None 전부 다 읽기

# Load all sheets in the Excel file

all_survey_data = pd.read_excel("fcc_survey.xlsx",

sheet_name=None)

# View the sheet names in all_survey_data

print(all_survey_data.keys())DataFrame 만들고 values for문 돌리기

# Create an empty data frame

all_responses = pd.DataFrame()

# Set up for loop to iterate through values in responses

for df in responses.values():

# Print the number of rows being added

print("Adding {} rows".format(df.shape[0]))

# Append df to all_responses, assign result

all_responses = all_responses.append(df)

# Graph employment statuses in sample

counts = all_responses.groupby("EmploymentStatus").EmploymentStatus.count()

counts.plot.barh()

plt.show()

custom null값 변경하는 방법 및 읽으면서 dtype 설정

실습

# Set dtype to load appropriate column(s) as Boolean data

survey_data = pd.read_excel("fcc_survey_subset.xlsx",

dtype={"HasDebt": bool})

# View financial burdens by Boolean group

print(survey_data.groupby("HasDebt").sum())# Load file with Yes as a True value and No as a False value

survey_subset = pd.read_excel("fcc_survey_yn_data.xlsx",

dtype={"HasDebt": bool,

"AttendedBootCampYesNo": bool},

true_values=['Yes'],

false_values=['No'])

# View the data

print(survey_subset.head())

parse_dates 실습

# Load file, with Part1StartTime parsed as datetime data

survey_data = pd.read_excel("fcc_survey.xlsx",

parse_dates=['Part1StartTime'])

# Print first few values of Part1StartTime

print(survey_data.Part1StartTime.head())# Create dict of columns to combine into new datetime column

datetime_cols = {"Part2Start": ["Part2StartDate","Part2StartTime"]}

# Load file, supplying the dict to parse_dates

survey_data = pd.read_excel("fcc_survey_dts.xlsx",

parse_dates=datetime_cols)

# View summary statistics about Part2Start

print(survey_data.Part2Start.describe())

format으로 형식 지정

# Parse datetimes and assign result back to Part2EndTime

survey_data["Part2EndTime"] = pd.to_datetime(survey_data["Part2EndTime"],

format="%m%d%Y %H:%M:%S")3. Importing Data from Databases

공통 관계형 데이터베이스

일반적인 관계형 데이터베이스에는 Microsoft SQL Server, Oracle, Postgre가 포함

SQL 및 SQLite를 사용 다른 데이터베이스와 달리 SQLite 데이터베이스는 CSV 및 Excel 파일과 마찬가지로 일반 자체 포함 컴퓨터 파일로 저장되므로 데이터 공유에 적합

# Import sqlalchemy's create_engine() function

from sqlalchemy import create_engine

# Create the database engine

engine = create_engine("sqlite:///data.db")

# View the tables in the database

print(engine.table_names())'sql 불러오기

# Load libraries

import pandas as pd

from sqlalchemy import create_engine

# Create the database engine

engine = create_engine('sqlite:///data.db')

# Load hpd311calls without any SQL

hpd_calls = pd.read_sql("Select * from hpd311calls",engine)

# View the first few rows of data

print(hpd_calls.head())# Create the database engine

engine = create_engine("sqlite:///data.db")

# Create a SQL query to load the entire weather table

query = """

SELECT *

FROM weather;

"""

# Load weather with the SQL query

weather = pd.read_sql(query, engine)

# View the first few rows of data

print(weather.head())sql문 실습

# Create database engine for data.db

engine = create_engine("sqlite:///data.db")

# Write query to get date, tmax, and tmin from weather

query = """

SELECT date,

tmax,

tmin

FROM weather;

"""

# Make a data frame by passing query and engine to read_sql()

temperatures = pd.read_sql(query,engine)

# View the resulting data frame

print(temperatures)# Create query to get hpd311calls records about safety

query = """

select *

from hpd311calls

where complaint_type = 'SAFETY';

"""

# Query the database and assign result to safety_calls

safety_calls = pd.read_sql(query,engine)

# Graph the number of safety calls by borough

call_counts = safety_calls.groupby('borough').unique_key.count()

call_counts.plot.barh()

plt.show()# Create query for records with max temps <= 32 or snow >= 1

query = """

SELECT *

FROM weather

where tmax

<= 32 or snow >= 1;

"""

# Query database and assign result to wintry_days

wintry_days = pd.read_sql(query,engine)

# View summary stats about the temperatures

print(wintry_days.describe())

더 많은 sql문 실습

# Create query for unique combinations of borough and complaint_type

query = """

SELECT DISTINCT borough,

complaint_type

FROM hpd311calls;

"""

# Load results of query to a data frame

issues_and_boros = pd.read_sql(query,engine)

# Check assumption about issues and boroughs

print(issues_and_boros)# Create query to get call counts by complaint_type

query = """

select complaint_type,

count(*)

FROM hpd311calls

group by complaint_type;

"""

# Create data frame of call counts by issue

calls_by_issue = pd.read_sql(query, engine)

# Graph the number of calls for each housing issue

calls_by_issue.plot.barh(x="complaint_type")

plt.show()

# Create a query to get month and max tmax by month

query = """

SELECT month,

MAX(tmax)

FROM weather

group by month;"""

# Get data frame of monthly weather stats

weather_by_month = pd.read_sql(query, engine)

# View weather stats by month

print(weather_by_month)# Create query to get temperature and precipitation by month

query = """

SELECT month,

MAX(tmax),

MIN(tmin),

SUM(prcp)

FROM weather

GROUP BY month;

"""

# Get data frame of monthly weather stats

weather_by_month = pd.read_sql(query, engine)

# View weather stats by month

print(weather_by_month)

Join 실습

# Query to join weather to call records by date columns

query = """

SELECT *

FROM hpd311calls

JOIN weather

ON hpd311calls.created_date = weather.date;

"""

# Create data frame of joined tables

calls_with_weather = pd.read_sql(query,engine)

# View the data frame to make sure all columns were joined

print(calls_with_weather.head())# Query to get hpd311calls and precipitation values

query = """

SELECT hpd311calls.*, weather.prcp

FROM hpd311calls

JOIN weather

ON hpd311calls.created_date = weather.date;"""

# Load query results into the leak_calls data frame

leak_calls = pd.read_sql(query, engine)

# View the data frame

print(leak_calls.head())# Query to get water leak calls and daily precipitation

query = """

SELECT hpd311calls.*, weather.prcp

FROM hpd311calls

JOIN weather

ON hpd311calls.created_date = weather.date

WHERE hpd311calls.complaint_type = 'WATER LEAK';"""

# Load query results into the leak_calls data frame

leak_calls = pd.read_sql(query, engine)

# View the data frame

print(leak_calls.head())# Query to get heat/hot water call counts by created_date

query = """

SELECT hpd311calls.created_date,

COUNT(*)

FROM hpd311calls

WHERE hpd311calls.complaint_type = 'HEAT/HOT WATER'

GROUP BY hpd311calls.created_date;

"""

# Query database and save results as df

df = pd.read_sql(query,engine)

# View first 5 records

print(df.head())# Modify query to join tmax and tmin from weather by date

query = """

SELECT hpd311calls.created_date,

COUNT(*),

weather.tmax,

weather.tmin

FROM hpd311calls

JOIN weather

ON hpd311calls.created_date = weather.date

WHERE hpd311calls.complaint_type = 'HEAT/HOT WATER'

GROUP BY hpd311calls.created_date;

"""

# Query database and save results as df

df = pd.read_sql(query, engine)

# View first 5 records

print(df.head())

4.Importing JSON Data and Working with APIs

JSON은 웹을 통해 데이터를 전송하는 일반적인 형식이다. 데이터 프레임과 달리 JSON 데이터는 표 형식이 아닙니다. 따라서 레코드에 대한 값이 없는 경우 속성을 null 값으로 저장하는 대신 생략할 수 있습니다. 다시 말해, 레코드가 모두 동일한 속성 집합을 가질 필요는 없습니다. 대신 데이터는 개체의 모음으로 구성됩니다.개체는 Python 사전과 유사합니다. 개체는 중괄호로 둘러싸여 있고 속성-값 쌍을 포함합니다. JSON의 마지막 특징은 중첩될 수 있다는 것입니다. 값 자체는 객체 또는 객체 목록일 수 있습니다.

# Load pandas as pd

import pandas as pd

# Load the daily report to a data frame

pop_in_shelters = pd.read_json("dhs_daily_report.json")

# View summary stats about pop_in_shelters

print(pop_in_shelters.describe())

orient split 구분

try:

# Load the JSON with orient specified

df = pd.read_json("dhs_report_reformatted.json",

orient='split')

# Plot total population in shelters over time

df["date_of_census"] = pd.to_datetime(df["date_of_census"])

df.plot(x="date_of_census",

y="total_individuals_in_shelter")

plt.show()

except ValueError:

print("pandas could not parse the JSON.")

API 불러오기 연습

api_url = "https://api.yelp.com/v3/businesses/search"

# Get data about NYC cafes from the Yelp API

response = requests.get(api_url,

headers=headers,

params=params)

# Extract JSON data from the response

data = response.json()

# Load data to a data frame

cafes = pd.DataFrame(data["businesses"])

# View the data's dtypes

print(cafes.dtypes)# Create dictionary to query API for cafes in NYC

parameters = {"term":"cafe",

"location":"NYC"}

# Query the Yelp API with headers and params set

response = requests.get(api_url,

params=parameters,

headers=headers)

# Extract JSON data from response

data = response.json()

# Load "businesses" values to a data frame and print head

cafes = pd.DataFrame(data["businesses"])

print(cafes.head())# Create dictionary that passes Authorization and key string

headers = {"Authorization": "Bearer {}".format(api_key)}

# Query the Yelp API with headers and params set

response = requests.get(api_url,params=params, headers = headers)

# Extract JSON data from response

data = response.json()

# Load "businesses" values to a data frame and print names

cafes = pd.DataFrame(data["businesses"])

print(cafes.name)

중첩된 JSON

JSON에는 특성-값 쌍이 있는 개체가 포함되어 있습니다. JSON은 값 자체가 개체일 때 중첩됩니다.

# Load json_normalize()

from pandas.io.json import json_normalize

# Isolate the JSON data from the API response

data = response.json()

# Flatten business data into a data frame, replace separator

cafes = json_normalize(data["businesses"],

sep='_')

# View data

print(cafes.head())# Load other business attributes and set meta prefix

flat_cafes = json_normalize(data["businesses"],

sep="_",

record_path="categories",

meta=["name",

"alias",

"rating",

["coordinates", "latitude"],

["coordinates", "longitude"]],

meta_prefix="biz_")

# View the data

print(flat_cafes.head())

json params 이어붙이기 append

# Add an offset parameter to get cafes 51-100

params = {"term": "cafe",

"location": "NYC",

"sort_by": "rating",

"limit": 50,

"offset":50}

result = requests.get(api_url, headers=headers, params=params)

next_50_cafes = json_normalize(result.json()["businesses"])

# Append the results, setting ignore_index to renumber rows

cafes = top_50_cafes.append(next_50_cafes, ignore_index = True)

# Print shape of cafes

print(cafes.shape)# Merge crosswalk into cafes on their zip code fields

cafes_with_pumas = cafes.merge(crosswalk,

left_on="location_zip_code",

right_on="zipcode")

# Merge pop_data into cafes_with_pumas on puma field

cafes_with_pop = cafes_with_pumas.merge(pop_data, on="puma")

# View the data

print(cafes_with_pop.head())